The AR Tour Experience project adapted the Big Bus Tours model for Savannah by downsizing the operation and focusing on personalized tour groups of 4 to 6 people. To address low tourist engagement, we designed a gamified AR tour driven by eye and gesture tracking. The core deliverable was a high-fidelity prototype, built and ready for usability testing within a single week, which let us gather user insights and test the feasibility of an immersive premium tour product.

I owned the visual design, technical execution, and user validation for the AR concept, rapidly prototyping the high-fidelity demo and leading all subsequent usability testing.

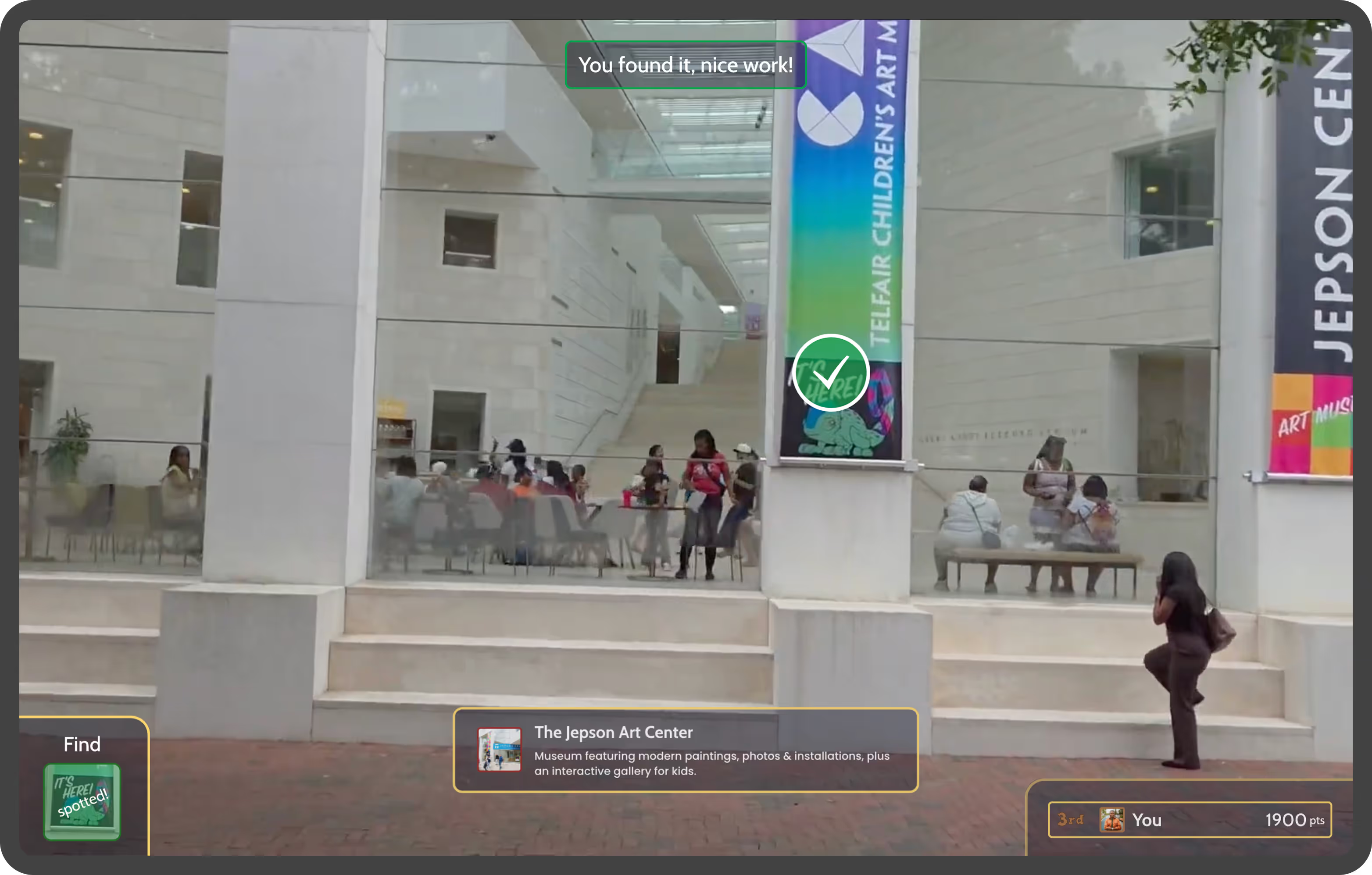

We designed an 'I Spy'-inspired gamified AR experience that uses transparent MicroLED display windows to overlay responsive visual prompts on the view outside. The prompts keep tourists actively engaged and in step with the tour guide's narrative.

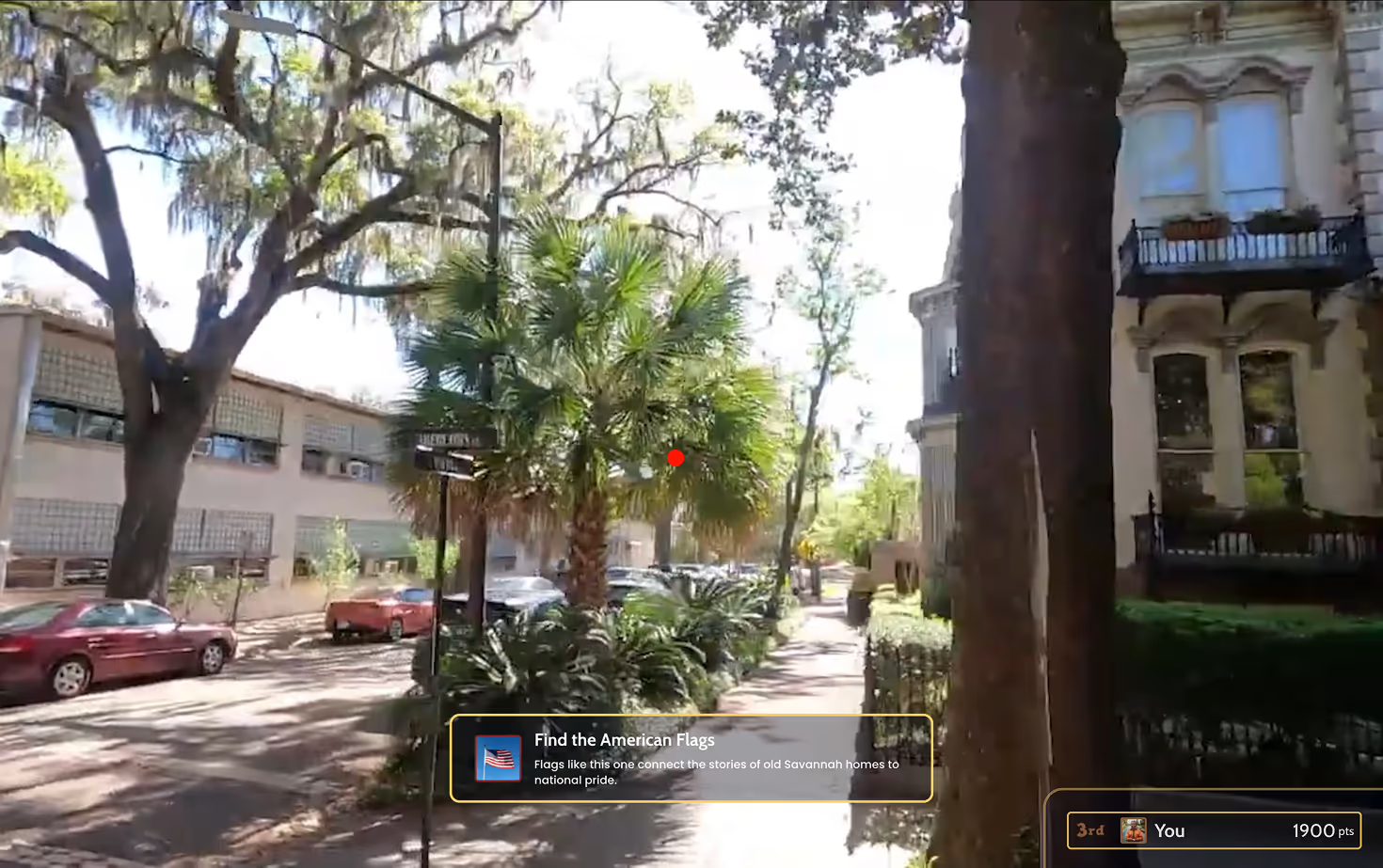

This game type presents a real-time visual prompt and asks the tourist to quickly locate a specific object in the environment through the AR window interface.

In this task, the user matches a cropped image preview shown in the interface with the full real-world structure it represents, which calls for visual pattern recognition.

The Quicktime Event is a high-intensity task: the user must identify and select multiple objects in the environment within a short time window, which tests reaction speed.
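The timed, multi-object rule described here can be sketched in a few lines. This is a minimal illustration, not the prototype's actual code: the function name `createQuickTimeEvent`, the object ids, the time limit, and the one-point-per-object scoring are all assumptions.

```javascript
// Hypothetical sketch of a Quicktime Event round.
// Assumptions: targets are plain string ids, time is in milliseconds,
// and each found target is worth one point.
function createQuickTimeEvent(targetIds, timeLimitMs, startMs) {
  const remaining = new Set(targetIds);
  const found = [];

  return {
    // Accept a selection only if it is a not-yet-found target
    // and it arrives inside the time window.
    select(objectId, nowMs) {
      const inWindow = nowMs - startMs <= timeLimitMs;
      if (!inWindow || !remaining.has(objectId)) return false;
      remaining.delete(objectId);
      found.push(objectId);
      return true;
    },
    // The round ends when every target is found or time runs out.
    isOver(nowMs) {
      return remaining.size === 0 || nowMs - startMs > timeLimitMs;
    },
    // Number of targets found in time.
    score() {
      return found.length;
    },
  };
}
```

Passing timestamps in explicitly, rather than reading a clock inside the function, keeps the rule easy to replay with recorded session data.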

This passive minigame maintains engagement during lulls between attractions: the user locates recurring common objects over an extended period to accumulate bonus points.

We used eye and gesture tracking to confirm that the user had found the right object, then displayed contextual information about it on the windows. Each confirmed match told the system that the prompt had been recognized, which kept the game moving.
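One way to picture this validation step is a gaze dwell check followed by a confirming gesture. The sketch below is an assumption about how such a rule could work, not a description of the actual system: the sample shape `{t, x, y}`, the rectangular target, the dwell threshold, and the rule that the gesture must come after the dwell completes are all hypothetical.

```javascript
// Hypothetical sketch: confirm recognition from gaze dwell plus a gesture.
// Assumptions: gaze samples are {t, x, y} in ms and window pixels,
// and a target is an axis-aligned rectangle {x, y, w, h}.
function isInside(target, point) {
  return (
    point.x >= target.x &&
    point.x <= target.x + target.w &&
    point.y >= target.y &&
    point.y <= target.y + target.h
  );
}

// Returns the timestamp at which gaze has rested on the target
// continuously for dwellMs, or null if that never happens.
function dwellCompleteAt(samples, target, dwellMs) {
  let enteredAt = null;
  for (const s of samples) {
    if (isInside(target, s)) {
      if (enteredAt === null) enteredAt = s.t;
      if (s.t - enteredAt >= dwellMs) return s.t;
    } else {
      enteredAt = null; // gaze left the target, so the dwell restarts
    }
  }
  return null;
}

// Recognition counts only if a gesture arrives at or after the dwell completes.
function isRecognized(samples, target, dwellMs, gestureAtMs) {
  const dwellAt = dwellCompleteAt(samples, target, dwellMs);
  return dwellAt !== null && gestureAtMs !== null && gestureAtMs >= dwellAt;
}
```

Requiring both signals is one way to reduce accidental selections from a passing glance; a real system would tune the dwell threshold through testing.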

We focused on optimizing Big Bus's existing, underutilized sprinter van asset, selecting this model for its efficiency in tight urban environments. This strategic shift addressed city congestion while ensuring personalized comfort and preventing small tourist groups from fragmenting due to overcrowding.

We integrated Light Control Glass (LCG) for sun management and contrast, leveraging eye-tracking data to dynamically control the UI's size and opacity. This design actively mitigated visual clutter and precisely directed user focus.


To validate the concept quickly, I built a functionally accurate prototype in HTML/CSS/JS within a single week. I used macOS's native head and eyebrow tracking to simulate the eye and gesture inputs, which made the core interaction testable with users right away.
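A sketch of how such a simulation could be wired up in the browser follows. It assumes the macOS accessibility features drive the system pointer, so the page only sees ordinary `mousemove` and `click` events; that wiring, the function name `attachSimulatedInputs`, and the callback shapes are assumptions for illustration rather than the prototype's actual code.

```javascript
// Hypothetical sketch: treat pointer events as stand-ins for gaze and gesture.
// Assumption: head movement moves the system pointer and a facial
// expression triggers a click, so standard DOM events carry both inputs.
function attachSimulatedInputs(target, onGaze, onGesture) {
  // Pointer position stands in for the gaze point.
  const handleMove = (e) =>
    onGaze({ x: e.clientX, y: e.clientY, t: e.timeStamp });
  // A click stands in for the confirming gesture.
  const handleClick = (e) =>
    onGesture({ x: e.clientX, y: e.clientY, t: e.timeStamp });

  target.addEventListener("mousemove", handleMove);
  target.addEventListener("click", handleClick);

  // Return a cleanup function so a test session can detach the listeners.
  return () => {
    target.removeEventListener("mousemove", handleMove);
    target.removeEventListener("click", handleClick);
  };
}
```

In a page this might be called as `attachSimulatedInputs(document, updateGaze, confirmSelection)`, where both callbacks are hypothetical handlers owned by the game logic.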

Solid preliminary research was essential for defining the true problem. Specifically, our findings on tourist disengagement and the constraints of the Savannah urban environment drove the shift toward a personalized, gamified AR solution.

Moving beyond standard design tools like Figma, the code-based functional prototype strengthened our usability tests. Because participants could interact with it as they would with the real product, the sessions produced richer, more actionable insights and validated the core interaction model quickly.

Designing for AR demanded a different approach to UI management. I developed a system that uses gaze-tracking data to dynamically scale and reveal interface elements by controlling their tint, opacity, size, and blur, so that the interface never competes with the real-world view.
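The idea of fading and shrinking elements the user is not looking at can be sketched as a mapping from gaze distance to style values. The linear falloff, the specific ranges (opacity 0.2 to 1, scale 0.8 to 1, blur 0 to 6 px), and the function names are all assumptions chosen for illustration; tint is left out for brevity.

```javascript
// Hypothetical sketch: derive opacity, scale, and blur from gaze distance.
// Assumptions: gaze and element centre are {x, y} in pixels, and the
// falloff is linear out to focusRadiusPx.
function clamp01(value) {
  return Math.min(1, Math.max(0, value));
}

function gazeStyle(gaze, elementCentre, focusRadiusPx) {
  const distance = Math.hypot(gaze.x - elementCentre.x, gaze.y - elementCentre.y);
  // focus is 1 when gaze is on the element and 0 at or beyond the radius.
  const focus = 1 - clamp01(distance / focusRadiusPx);
  return {
    opacity: 0.2 + 0.8 * focus, // faint when ignored, solid when looked at
    scale: 0.8 + 0.2 * focus,   // slightly smaller when out of focus
    blurPx: 6 * (1 - focus),    // blurred when out of focus
  };
}

// Convert the numeric style into CSS property strings.
function toCss(style) {
  return {
    opacity: style.opacity.toFixed(2),
    transform: `scale(${style.scale.toFixed(2)})`,
    filter: `blur(${style.blurPx.toFixed(1)}px)`,
  };
}
```

In a browser, the result could be applied with `Object.assign(element.style, toCss(gazeStyle(gaze, centre, 200)))`, where `element`, `gaze`, and `centre` are hypothetical values supplied by the page.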